Artificial Intelligence (AI) is rapidly transforming various aspects of our lives and industries. It encompasses a wide range of technologies that enable machines to perform tasks that typically require human intelligence. From automating routine processes to driving innovation, AI's potential is vast and continues to expand. This page explores some of the key areas where AI is making a significant impact.

Key Applications of Artificial Intelligence

Automation and Efficiency

AI excels at automating repetitive and time-consuming tasks, freeing up human workers for more creative and strategic endeavors. This includes robotic process automation (RPA), automated customer service through chatbots, and streamlining workflows across different industries.

Data Analysis and Insights

AI algorithms can process and analyze massive datasets far more efficiently than humans. This capability allows for the extraction of valuable insights, identification of trends, and the creation of predictive models, leading to better decision-making in fields like finance, marketing, and scientific research.

Natural Language Processing (NLP)

NLP enables computers to understand, interpret, and generate human language. This powers applications like virtual assistants (e.g., Siri, Alexa), language translation services, sentiment analysis, and advanced search engines.

Computer Vision

Computer vision allows AI systems to "see" and interpret images and videos. This technology is crucial for applications such as facial recognition, autonomous vehicles, quality control in manufacturing, and medical image analysis.

Machine Learning and Prediction

Machine learning, a subset of AI, involves training algorithms on data to enable them to learn and make predictions or decisions without being explicitly programmed. This is used in recommendation systems (e.g., Netflix, Amazon), fraud detection, and predictive maintenance.
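The idea of "learning from data without being explicitly programmed" can be made concrete. The minimal Python sketch below never writes down the rule y = 3x + 2. Instead, gradient descent estimates the rule's two parameters from example data. The function names and the toy dataset are hypothetical and serve only as illustration.

```python
def train_linear_model(xs, ys, lr=0.01, epochs=5000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start with no knowledge of the rule
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    return w * x + b


# Toy training data generated from y = 3x + 2 (hypothetical example)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3 * x + 2 for x in xs]
w, b = train_linear_model(xs, ys)
print(round(w, 2), round(b, 2), round(predict(w, b, 10.0), 2))
```

The learned parameters end up very close to 3 and 2. The model can then make predictions for inputs it never saw during training.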

Healthcare Advancements

AI is revolutionizing healthcare through applications like diagnostic tools, drug discovery, personalized medicine, robotic surgery, and virtual health assistants, leading to more accurate diagnoses and improved patient outcomes.

Transportation and Logistics

AI is at the forefront of advancements in transportation, including the development of autonomous vehicles, intelligent traffic management systems, and optimized logistics and supply chain management.

Creative Industries

Increasingly, AI is being used as a tool in creative fields, assisting with tasks such as generating music, creating art, writing content, and designing products.

Personalization and Recommendations

AI algorithms power personalized experiences across various platforms, from recommending products based on your shopping history to tailoring content feeds on social media and streaming services.
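User-based collaborative filtering is one simple way to recommend products from shopping history. It finds shoppers whose purchases resemble yours and suggests what they bought. The sketch below assumes a tiny, made-up purchase matrix and measures resemblance with cosine similarity. Production recommenders are far more elaborate, and this sketch does not describe any specific company's system.

```python
import math


def cosine_similarity(a, b):
    # Similarity of two purchase vectors: 1.0 = same direction, 0.0 = no overlap
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(target_user, history, items, top_n=2):
    """Rank items the target user hasn't bought by similarity-weighted votes."""
    target = history[target_user]
    scores = [0.0] * len(items)
    for user, purchases in history.items():
        if user == target_user:
            continue
        sim = cosine_similarity(target, purchases)
        for i, bought in enumerate(purchases):
            scores[i] += sim * bought
    # Keep only unseen items with a positive score, best first
    candidates = [(scores[i], items[i]) for i in range(len(items))
                  if target[i] == 0 and scores[i] > 0]
    candidates.sort(reverse=True)
    return [item for _, item in candidates[:top_n]]


# Hypothetical purchase history: 1 = bought, 0 = not bought
items = ["laptop", "mouse", "keyboard", "monitor", "headphones"]
history = {
    "alice": [1, 1, 0, 0, 0],
    "bob":   [1, 1, 1, 0, 0],
    "carol": [0, 0, 0, 1, 1],
    "dave":  [1, 0, 1, 1, 0],
}
print(recommend("alice", history, items))  # ['keyboard', 'monitor']
```

Alice's history overlaps most with Bob's, so the keyboard he bought ranks first.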

These are just some of the many ways artificial intelligence is being used today, and its potential continues to grow as the technology evolves. Understanding these applications can help us appreciate the transformative power of AI and its impact on our future.

Types of Artificial Intelligence and Key Providers

Artificial intelligence can be broadly categorized based on its capabilities and functionalities. Understanding these different types helps in appreciating the current state of AI and its future potential.

Types of AI by Capability

- Artificial Narrow Intelligence (ANI) or Weak AI: This is the only type of AI that currently exists. ANI is designed and trained for a specific task. Examples include voice assistants like Siri and Alexa, facial recognition systems, and recommendation algorithms.

- Artificial General Intelligence (AGI) or Strong AI: AGI is a theoretical form of AI with the ability to understand, learn, and apply knowledge across a wide range of tasks, much like a human. Currently, AGI does not exist.

- Artificial Superintelligence (ASI): ASI is also theoretical and would surpass human intelligence in all aspects, including creativity, problem-solving, and general knowledge.

Types of AI by Functionality

- Reactive Machines: These are the most basic AI systems. They react to present stimuli based on pre-programmed rules and keep no memory of past experiences. An example is IBM's Deep Blue, the chess-playing computer that defeated world champion Garry Kasparov in 1997.

- Limited Memory AI: These systems can use past data to make decisions, improving over time. Most of today's AI applications, including self-driving cars and recommendation systems, fall into this category.

- Theory of Mind AI: This is a theoretical type of AI that would understand that others (humans, other AI) have beliefs, desires, and intentions that affect their behavior. It would be able to interact socially.

- Self-Aware AI: This is a further theoretical stage where AI would have consciousness, self-awareness, and an understanding of its own existence.

Key AI Providers

Numerous companies and organizations are at the forefront of developing and providing AI technologies and services. Here are some notable providers across different areas of AI:

- Large Language Models and Generative AI: OpenAI (ChatGPT, DALL-E), Google (Gemini, formerly Bard), Anthropic (Claude), Meta AI (Llama), Mistral AI.

- Cloud-based AI Platforms: Amazon Web Services (AWS AI), Microsoft Azure AI, Google Cloud AI.

- AI Hardware (GPUs): NVIDIA, AMD.

- AI Research and Development: DeepMind (Google), IBM, various university research labs.

- AI Applications and Solutions: Many companies offer AI-powered solutions for specific industries like healthcare (e.g., Artera), finance, and transportation.

This is not an exhaustive list, as the field of artificial intelligence is rapidly evolving with new players and innovations emerging constantly.

Understanding the Development of Large Language Models (LLMs)

Developing a Large Language Model like Llama involves several complex stages, from data collection to model deployment. Here's a simplified overview of the process and the key components involved in creating such sophisticated AI models.

1. Data Collection and Preprocessing

The foundation of any LLM is a massive dataset of text and code. This data is collected from various sources, including books, articles, websites, and code repositories. The quality and diversity of this data are crucial for the model's performance.

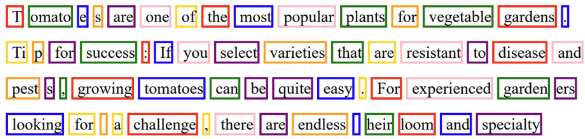

Once collected, the data undergoes extensive preprocessing. This includes:

- Cleaning: Removing irrelevant information, errors, and inconsistencies.

- Tokenization: Breaking down the text into smaller units called tokens (words, subwords, or characters) that the model can process.

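- Normalization: Converting text to a consistent format, for example by applying Unicode normalization. Older NLP pipelines often lowercased text as well, although most modern LLMs preserve case.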
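These preprocessing steps can be sketched as a small pipeline. The sketch rests on several assumptions chosen for the example: the HTML-stripping rule, the word-level regular-expression tokenizer, and the `<unk>` ID for unseen words. Production LLMs typically use learned subword tokenizers, such as byte-pair encoding, instead of a word-level one.

```python
import re
import unicodedata


def clean(text):
    # Cleaning: drop leftover HTML tags and collapse repeated whitespace
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def normalize(text, lowercase=False):
    # Normalization: NFKC maps compatibility characters (e.g. the "ﬁ" ligature) to standard ones
    text = unicodedata.normalize("NFKC", text)
    return text.lower() if lowercase else text


def tokenize(text):
    # Tokenization (word-level for simplicity): runs of word characters, or single punctuation marks
    return re.findall(r"\w+|[^\w\s]", text)


def build_vocab(tokens):
    # Assign each distinct token an integer ID; ID 0 is reserved for unknown tokens
    vocab = {"<unk>": 0}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab):
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokens]


raw = "<p>The  model   reads   text.</p>"
tokens = tokenize(normalize(clean(raw), lowercase=True))
vocab = build_vocab(tokens)
print(tokens)                 # ['the', 'model', 'reads', 'text', '.']
print(encode(tokens, vocab))  # [1, 2, 3, 4, 5]
```

The integer IDs produced by `encode` are what the model actually consumes.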

2. Model Architecture

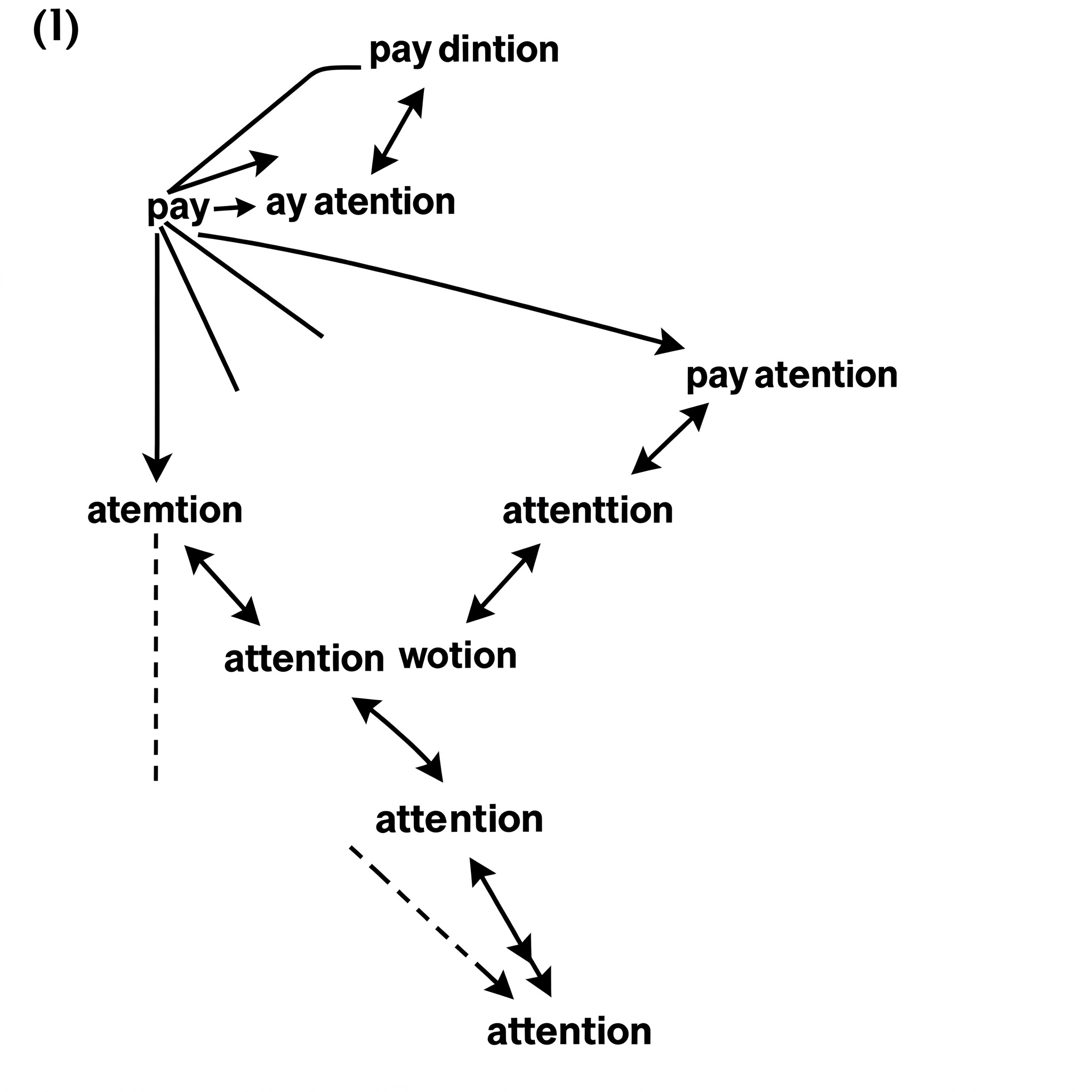

The architecture of an LLM defines how it processes and learns from the data. Modern LLMs are primarily based on the Transformer architecture. Key components of the Transformer include:

- Attention Mechanisms: These allow the model to weigh the importance of different parts of the input sequence when processing it.

- Multi-Head Attention: Multiple attention mechanisms working in parallel to capture different relationships in the data.

- Feed-Forward Networks: Fully connected layers applied to each token's representation independently, transforming the information gathered by attention.

- Encoder and Decoder (for some architectures): The original Transformer had both an encoder (to understand the input) and a decoder (to generate the output). Most current LLMs, including Llama, use a decoder-only architecture.

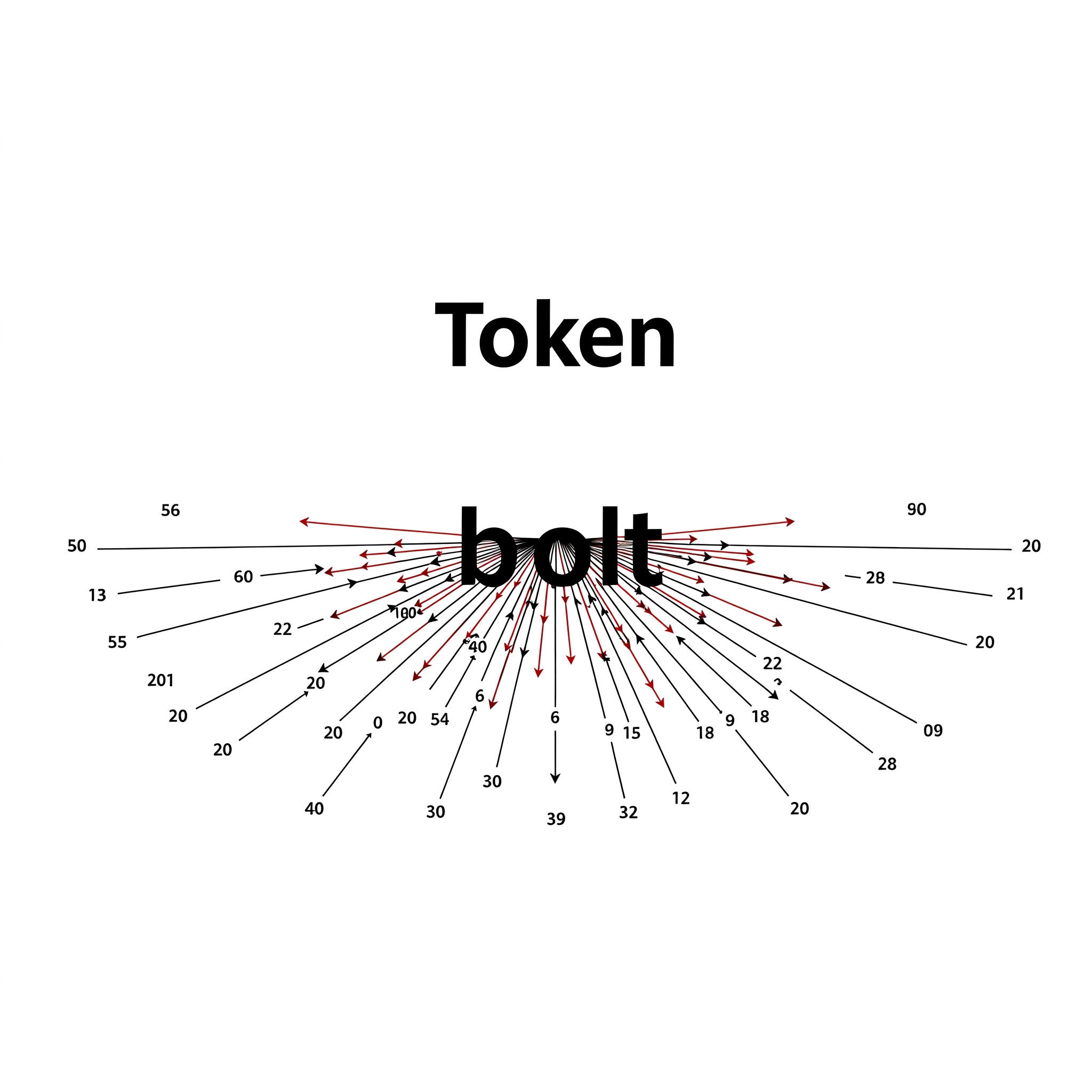

- Embedding Layers: These convert tokens into numerical vectors that the model can process.
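The plain-Python sketch below shows how an embedding layer and a single attention head fit together in a decoder-only model. Token IDs select rows of an embedding table. Each position then computes a weighted average over itself and earlier positions, using scaled dot-product attention with a causal mask. The matrices are random rather than trained. The sketch also omits multi-head attention, feed-forward layers, and the other components a real Transformer stacks together.

```python
import math
import random


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    # (n x k) @ (k x m) -> (n x m), using nested lists
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def random_matrix(rows, cols, rng):
    # Stand-in for learned weights: small random values
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def embed(token_ids, table):
    # Embedding layer: each token ID selects one row (vector) of the table
    return [table[t] for t in token_ids]


def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention with a causal mask."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs, all_weights = [], []
    for i in range(len(x)):
        # Causal mask: position i attends only to positions 0..i
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d_k)
                  for j in range(i + 1)]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output is the attention-weighted sum of the value vectors
        outputs.append([sum(weights[j] * v[j][c] for j in range(i + 1))
                        for c in range(len(v[0]))])
    return outputs, all_weights


rng = random.Random(0)
vocab_size, d_model = 10, 4
table = random_matrix(vocab_size, d_model, rng)
w_q, w_k, w_v = (random_matrix(d_model, d_model, rng) for _ in range(3))
x = embed([1, 2, 3, 4, 5], table)
out, weights = causal_self_attention(x, w_q, w_k, w_v)
print(len(out), len(out[0]))  # prints: 5 4 (one d_model-sized vector per token)
```

Multi-head attention repeats this computation with several independent sets of projection matrices and combines the results.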

3. Model Training

Training an LLM involves feeding the preprocessed data into the model and repeatedly adjusting its internal parameters (weights) to reduce its prediction error. This runs over a very large number of training steps that together process billions or even trillions of tokens. It is typically done using a technique called self-supervised learning, where the model learns to predict the next token in a sequence.
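In next-token prediction, each token's label is simply the token that follows it, so the training targets come from the text itself. The sketch below builds those (input, target) pairs. It then scores a model with the average cross-entropy loss that next-token training minimizes. As a stand-in for a neural network it uses a smoothed bigram count model, which is an assumption made only for illustration. An LLM instead adjusts its weights with gradient descent to lower this same kind of loss.

```python
import math
from collections import Counter, defaultdict


def next_token_pairs(token_ids):
    # Self-supervised labels: the target at each position is the token that follows it
    return list(zip(token_ids[:-1], token_ids[1:]))


def train_bigram(token_ids, vocab_size, alpha=1.0):
    # Stand-in "model": add-alpha smoothed estimates of P(next token | previous token)
    counts = defaultdict(Counter)
    for prev, nxt in next_token_pairs(token_ids):
        counts[prev][nxt] += 1
    probs = {}
    for prev in range(vocab_size):
        total = sum(counts[prev].values()) + alpha * vocab_size
        probs[prev] = [(counts[prev][t] + alpha) / total for t in range(vocab_size)]
    return probs


def cross_entropy(probs, token_ids):
    # Average negative log-probability the model assigns to each actual next token
    pairs = next_token_pairs(token_ids)
    return -sum(math.log(probs[prev][nxt]) for prev, nxt in pairs) / len(pairs)


vocab_size = 3
token_ids = [0, 1, 2, 0, 1, 2, 0, 1]  # hypothetical tokenized text
uniform = {t: [1 / vocab_size] * vocab_size for t in range(vocab_size)}
trained = train_bigram(token_ids, vocab_size)
print(round(cross_entropy(uniform, token_ids), 3))  # untrained baseline: ln(3)
print(round(cross_entropy(trained, token_ids), 3))  # lower after "training"
```

A model that has learned the pattern in the data assigns higher probability to the true next tokens, which shows up as a lower loss.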

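This training process requires immense computational resources, often using thousands of high-end GPUs for extended periods. Techniques like distributed training, which spread the data and often the model itself across many GPUs, are used to speed up the process and to fit models too large for a single device.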
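Data parallelism is a common form of distributed training. Each GPU holds a copy of the model and computes gradients on its own slice of the batch. The gradients are then averaged across devices (an "all-reduce"), and every worker applies the same update. The single-process simulation below illustrates this idea with a one-parameter model. The worker count, learning rate, and helper names are hypothetical. Real systems run the all-reduce over fast interconnects using communication libraries such as NVIDIA NCCL.

```python
def gradient(w, batch):
    # Derivative of mean squared error for the model y ≈ w * x on one batch
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def shard(batch, num_workers):
    # Split the batch into equal slices, one per worker (GPU)
    assert len(batch) % num_workers == 0, "batch must divide evenly across workers"
    size = len(batch) // num_workers
    return [batch[i * size:(i + 1) * size] for i in range(num_workers)]


def all_reduce_mean(values):
    # Stand-in for a collective all-reduce: every worker ends up with the average
    return sum(values) / len(values)


def data_parallel_step(w, batch, num_workers, lr):
    # Each worker computes a gradient on its own shard (in parallel on real hardware)
    local_grads = [gradient(w, s) for s in shard(batch, num_workers)]
    # The averaged gradient is applied identically everywhere, keeping model copies in sync
    return w - lr * all_reduce_mean(local_grads)


batch = [(float(x), 2.0 * x) for x in range(1, 9)]  # 8 examples from y = 2x
w = 0.0
for _ in range(100):
    w = data_parallel_step(w, batch, num_workers=4, lr=0.01)
print(round(w, 4))  # converges to about 2.0
```

With equal-sized shards, the average of the per-worker gradients equals the full-batch gradient. Each step therefore matches what a single device would compute on the whole batch, while the work is split four ways.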

4. Evaluation and Fine-tuning

After the initial training, the model is evaluated on various benchmark datasets to assess its performance on different tasks. Based on the evaluation results, the model might undergo fine-tuning. Fine-tuning involves training the model further on smaller, task-specific datasets to improve its performance on particular applications (e.g., question answering, text summarization).
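A question-answering benchmark, for example, might be scored with exact-match accuracy: the fraction of model answers that match the reference answer after light normalization. The sketch below assumes that metric and uses made-up predictions. Actual benchmarks define their own scoring rules, and many tasks use other metrics entirely.

```python
import string


def normalize_answer(text):
    # Lowercase, strip punctuation, and collapse whitespace before comparing
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    return " ".join(text.split())


def exact_match_accuracy(predictions, references):
    # Fraction of predictions that match their reference after normalization
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    hits = sum(normalize_answer(p) == normalize_answer(r)
               for p, r in zip(predictions, references))
    return hits / len(references)


# Hypothetical model outputs and reference answers
predictions = ["Paris.", "4", "The blue whale"]
references = ["paris", "four", "the Blue Whale"]
print(exact_match_accuracy(predictions, references))  # 2 of 3 answers match
```

The "4" versus "four" miss shows a known weakness of strict matching: a correct answer given in a different form is scored as wrong.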

5. Deployment

Once the model meets the desired performance levels, it can be deployed for use in various applications. This might involve hosting the model on cloud infrastructure, integrating it into software applications, or even running it locally (as discussed in the Llama installation guide).

The development of LLMs is a continuous process, with ongoing research focused on improving model architecture, training techniques, efficiency, and addressing challenges like bias and factual accuracy.